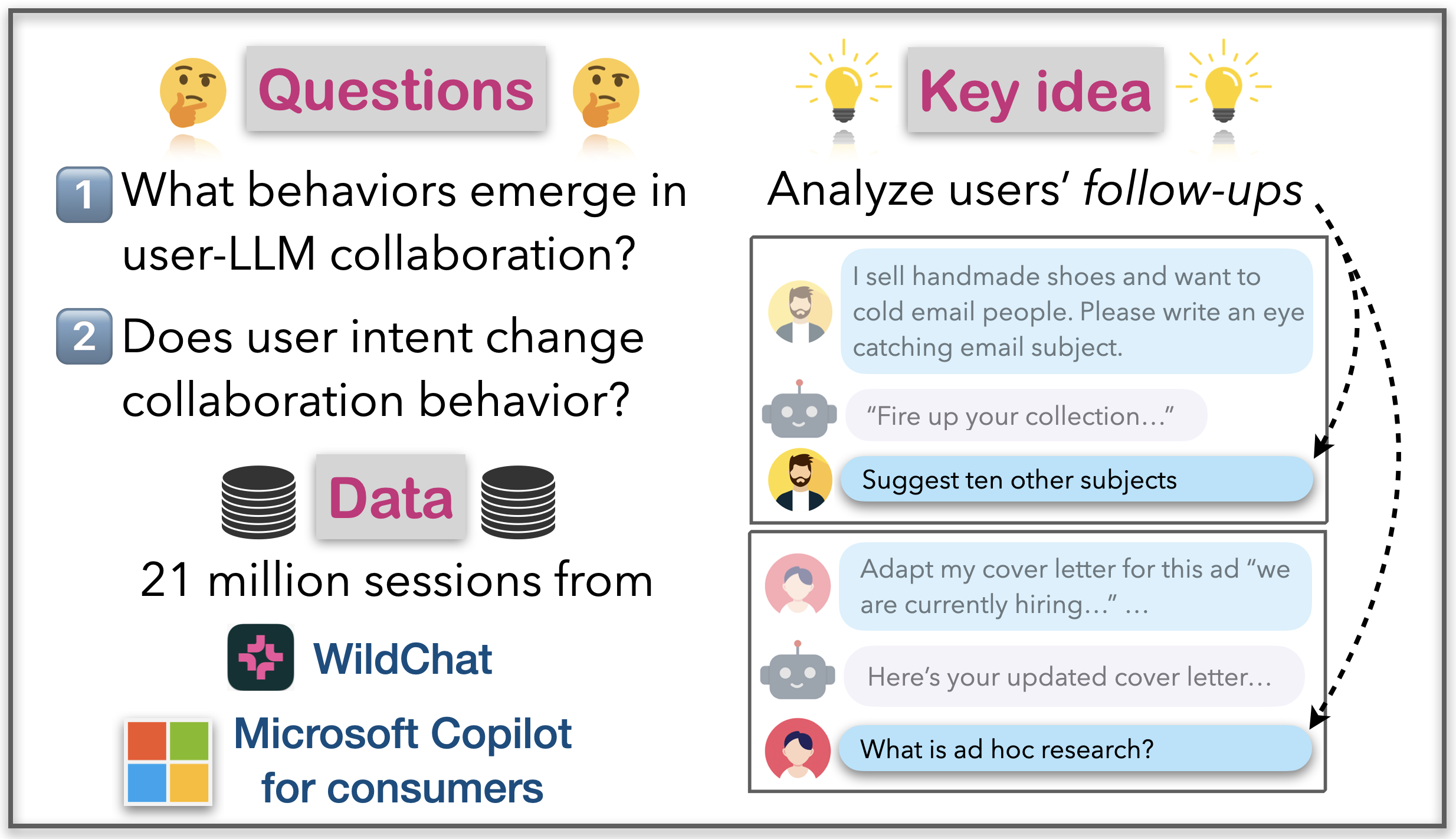

What does it mean to collaborate with LLM-powered Copilots? A common metaphor treats LLMs as “assistants”: users supply a prompt specifying their task, the LLM responds, and users leave with the response in hand. We instead adopted the metaphor of LLMs as “collaborators” and asked what collaborating with an LLM looks like in practice. To explore this, we analyzed English multi-turn sessions from 21 million Microsoft Copilot for consumer logs, as well as public WildChat logs, both of which reflect real-world user–LLM interactions.

Recent papers have adopted the “assistant” metaphor and examined the tasks users initiate with AI tools such as Claude, Microsoft Copilot for consumer, and WildChat (e.g., coding, search, and writing). We instead analyze users' follow-ups after their initial request to the LLM, because follow-ups capture how users collaborate with the LLM. We restricted our analysis to writing tasks to keep it manageable.

We asked two questions in our analysis: (Q1) What user behaviors emerge in user–LLM collaborations? (Q2) Do users collaborate differently based on their writing intent – for example, when composing an email versus writing a story?

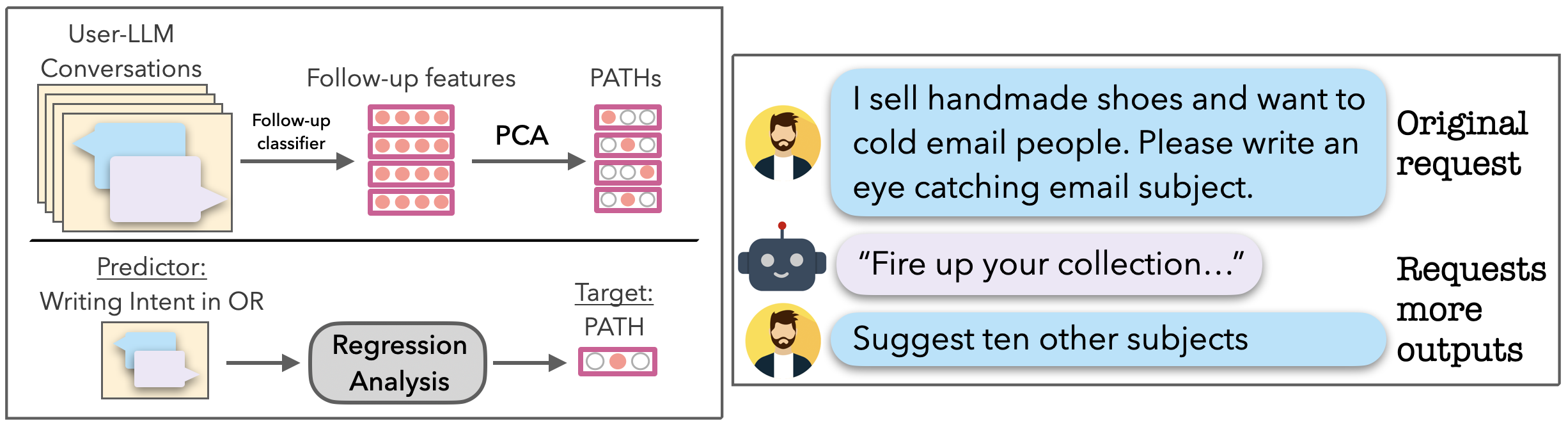

We addressed the first question by using a GPT-4o classifier to differentiate between users’ follow-ups and their original requests (see the example below). We then grouped the follow-ups into a small set of clusters that represent Prototypical Human-AI Collaboration Behaviors (PATHs). To answer our second question, we used another GPT-4o classifier to identify the writing intent behind each original request, and then ran a regression analysis to explore how these intents correlate with different PATHs. Our paper covers the classifiers and analysis in more detail!
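To make the intent-to-PATH step concrete, here is a minimal sketch and not the analysis from the paper. It assumes each session has already been labeled with one writing intent and a set of PATHs; the session records, label names, and the `log_odds_ratio` helper are all hypothetical. For a single binary predictor, the coefficient of a logistic regression equals the log odds ratio of the corresponding 2×2 table, so the sketch computes that quantity directly, with a small smoothing term added to avoid division by zero.

```python
import math

def log_odds_ratio(sessions, intent, path):
    """Smoothed log odds ratio of `path` occurring in sessions with `intent`
    versus sessions with any other intent (hypothetical helper)."""
    # 2x2 contingency table indexed as table[has_intent][has_path].
    # Each cell starts at 0.5 (Haldane-Anscombe correction) to avoid log(0).
    table = [[0.5, 0.5], [0.5, 0.5]]
    for session in sessions:
        has_intent = int(session["intent"] == intent)
        has_path = int(path in session["paths"])
        table[has_intent][has_path] += 1
    odds_with_intent = table[1][1] / table[1][0]
    odds_without_intent = table[0][1] / table[0][0]
    return math.log(odds_with_intent / odds_without_intent)

# Toy sessions; the labels are illustrative and not taken from the paper's data.
sessions = [
    {"intent": "brainstorm", "paths": {"request_more"}},
    {"intent": "brainstorm", "paths": {"request_more", "ask_question"}},
    {"intent": "brainstorm", "paths": set()},
    {"intent": "email", "paths": {"ask_question"}},
    {"intent": "email", "paths": set()},
    {"intent": "email", "paths": set()},
]

lor = log_odds_ratio(sessions, "brainstorm", "request_more")
print(f"log odds ratio: {lor:.3f}")
```

A positive value means the PATH is more likely in sessions with that intent than in other sessions. A full regression would include all intents at once and could add controls, which this sketch leaves out.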

We found that eight PATHs explained 80-85% of the variance in our large-scale log datasets. These PATHs corresponded to users asking questions, requesting more responses, and adding large chunks of text to the response, among others. Our paper describes the complete set of PATHs.
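This post does not say how the variance figure was computed; the paper has those details. As a rough illustration only, the sketch below assumes a PCA-style decomposition of a hypothetical sessions-by-follow-up-type matrix and finds how many components are needed to reach a target share of the variance. The matrix, the `components_needed` helper, and the threshold are assumptions made for this example.

```python
import numpy as np

def components_needed(X, threshold=0.80):
    """Return the smallest number of principal components whose cumulative
    explained-variance ratio reaches `threshold`, plus the cumulative ratios."""
    Xc = X - X.mean(axis=0)                      # center each column
    s = np.linalg.svd(Xc, compute_uv=False)      # singular values, descending
    ratio = s**2 / np.sum(s**2)                  # variance share per component
    cumulative = np.cumsum(ratio)
    # First index where the cumulative share reaches the threshold.
    k = int(np.searchsorted(cumulative, threshold)) + 1
    return min(k, len(cumulative)), cumulative

# Synthetic data: 200 "sessions", 6 follow-up types driven by 2 latent factors.
rng = np.random.default_rng(0)
latent = rng.normal(size=(200, 2))
loadings = rng.normal(size=(2, 6))
X = latent @ loadings + 0.01 * rng.normal(size=(200, 6))

k, cumulative = components_needed(X, threshold=0.80)
print(k, np.round(cumulative, 3))
```

Because the synthetic data has only two underlying factors, at most two components are needed here. On real logs the count depends on the data and on the chosen threshold.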

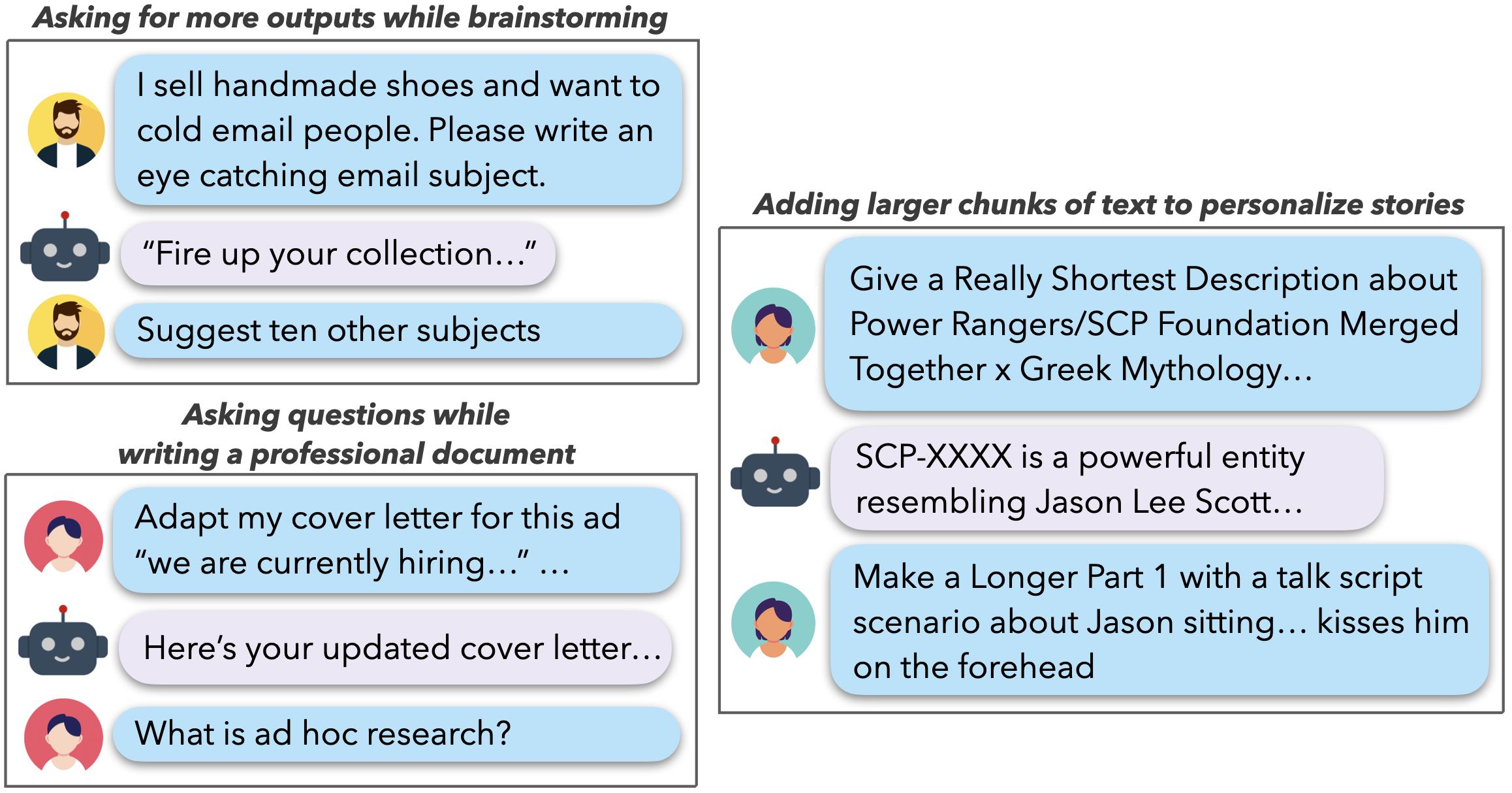

Most interestingly, we found correlations between specific writing intents and PATHs (examples below). For example, users frequently requested more responses when brainstorming. Similarly, they asked questions while writing technical or professional documents. In doing so, users combined two tasks that researchers have traditionally treated as separate: search and writing. They also added large chunks of text to personalize generations, such as a speech or story they wanted creative control over. Our paper describes several such correlations and highlights under-explored research problems that warrant further investigation.

Our results have implications for session-level LLM alignment and alignment under uncertain or evolving feedback, but we'll highlight a single key implication here — LLMs as "thought partners."

In asking questions, seeking feedback, and brainstorming with LLMs, users treated LLMs as partners in longer-term learning. Aligning LLMs for long-term learning represents a compelling opportunity, and we echo recent calls to develop LLMs as thought partners. This goal will require alignment research to prevent the homogenization of human voices and ideas and to incorporate some of the desirable difficulties known to be crucial for learning.

Our paper discusses all the details and cites several recent papers in line with our findings and implications. Please take a look!

@article{mysore2025prototypical,
  title={Prototypical Human-AI Collaboration Behaviors from LLM-Assisted Writing in the Wild},
  author={Mysore, Sheshera and Das, Debarati and Cao, Hancheng and Sarrafzadeh, Bahareh},
  journal={arXiv preprint arXiv:2505.16023},
  year={2025}
}